
A research team at INRS is putting artificial intelligence to work on COVID-19 screening.

INRS researchers are combining their expertise to develop a platform using artificial intelligence (AI) for automated voice analysis that would capture sounds associated with the voice of a person infected with COVID-19. Photo: Adobe Stock

Testing for COVID-19 has become a mandatory step for a growing number of travelers, as well as a diagnostic tool and preventive measure for many of us. It is done by swabbing the back of the throat and nose or by gargling. But it could soon be done by simply analyzing the sound of a cough, a non-invasive and inexpensive alternative that could benefit the healthcare community.

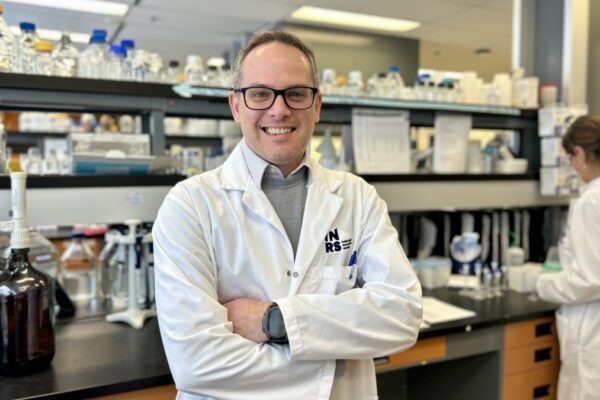

In their laboratories at the Centre Énergie Matériaux Télécommunications of the Institut national de la recherche scientifique (INRS), professors Roberto Morandotti and Tiago H. Falk are combining their expertise to develop a platform using artificial intelligence (AI) for automated voice analysis that would capture sounds associated with the voice of a person infected with COVID-19.

“Voice recognition has been around for several years with applications like Siri or Alexa. AI can already detect and process variations in breathing sounds, but we think we can go further and try to capture patterns in the sounds that are not detectable to the naked ear.”

Roberto Morandotti, Canada Research Chair in Intelligent Photonics.

Artificial intelligence at the service of COVID-19

Patients with COVID-19 typically develop respiratory symptoms such as shortness of breath, cough or sore throat, as well as documented increased muscle fatigue. As a result, AI-based approaches using speech and cough, along with other auditory modalities such as breathing, have shown great potential in this area.

“It is a combination of symptoms that helps AI algorithms detect different diseases. Each parameter extracted from the audio signal characterizes a certain symptom, and when taken together, they can provide strong evidence of COVID-19 infection.”

Tiago H. Falk, an expert in multimodal signal processing and applied AI.

Unlike conventional testing methods, audio systems would have the advantage of being non-invasive, inexpensive, and providing real-time results, making them an ideal tool for remote diagnosis and monitoring of COVID-19. More importantly, however, “developed solutions need to be interpretable in order to be adopted in the clinic,” adds Professor Falk.

The benefits of photonics

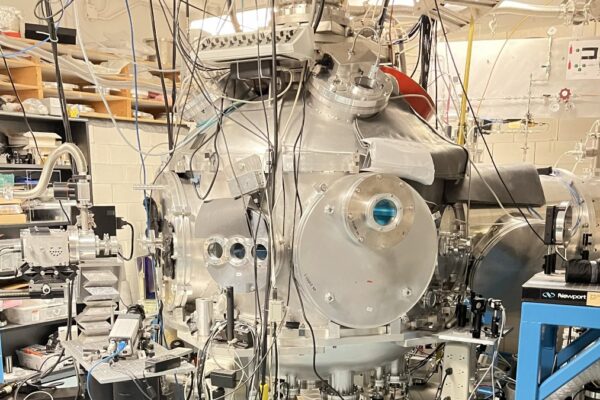

Given the limitations of electrical signals in modern computers, the researchers chose to use photon-based optical signals, which offer high data transmission speeds.

“By combining photonics with electronics, we are able to improve the processing capacity of massive data, such as human voices and all the diversity of sounds associated with them,” explains Professor Morandotti. “This allows us to train our platform with thousands of voice samples from people who may or may not have COVID-19.”

The work will be done in several stages. First, the team will develop the photonics-based platform for automatic and highly accurate analysis and classification of voice and breath sounds. Second, more interpretable and clinically relevant parameters will need to be extracted from the acoustic signals in order to provide the AI algorithm with additional intelligence and inputs. Third, the researchers will investigate the socio-ethical implications of using such technology in the medical field. The final step will be to apply the platform to the detection of COVID-19, in order to accelerate and improve access to screening, particularly in remote and sparsely populated areas of Quebec where such access may be limited.

The objective is to develop an audio-based diagnostic system for COVID-19 that is accessible to all, can be installed on existing devices, and is protected from cyber-attacks to respect data confidentiality.

This project will combine the expertise in multimodal signal processing and applied AI for health applications of Professor Falk’s team, the expertise in smart photonics of Professor Morandotti’s team, and the expertise in AI ethics, accessibility and privacy of Professor Carolyn Côté-Lussier from the Urbanisation Culture Société Research Centre.

Beyond COVID-19, this technology could be applied to other pathologies. For example, Professor Falk’s research team has already worked on audio-based AI algorithms for detecting depression, dysarthria, and autism spectrum disorders, which could benefit from the photonics-based platform. “The changes in patterns of our voice vary depending on the combination of symptoms, ranging from lung cancer to the common cold. It is as if each symptom had its own signature,” concludes Professor Roberto Morandotti.